|

|

||

|---|---|---|

| img | ||

| 4_bit_LLM_Quantization_with_GPTQ.ipynb | ||

| Decoding_Strategies_in_Large_Language Models.ipynb | ||

| Fine_tune_Llama_2_in_Google_Colab.ipynb | ||

| Fine_tune_a_Mistral_7b_model_with_DPO.ipynb | ||

| Improve_ChatGPT_with_Knowledge_Graphs.ipynb | ||

| Introduction_to_Weight_Quantization.ipynb | ||

| LICENSE | ||

| Mergekit.ipynb | ||

| Quantize_Llama_2_models_using_GGUF_and_llama_cpp.ipynb | ||

| Quantize_models_with_ExLlamaV2.ipynb | ||

| README.md | ||

| Visualizing_GPT_2's_Loss_Landscape.ipynb | ||

| nanoLoRA.ipynb | ||

README.md

🗣️ Large Language Model Course

Follow me on X • Blog • Hands-on GNN

The LLM course is divided into three parts:

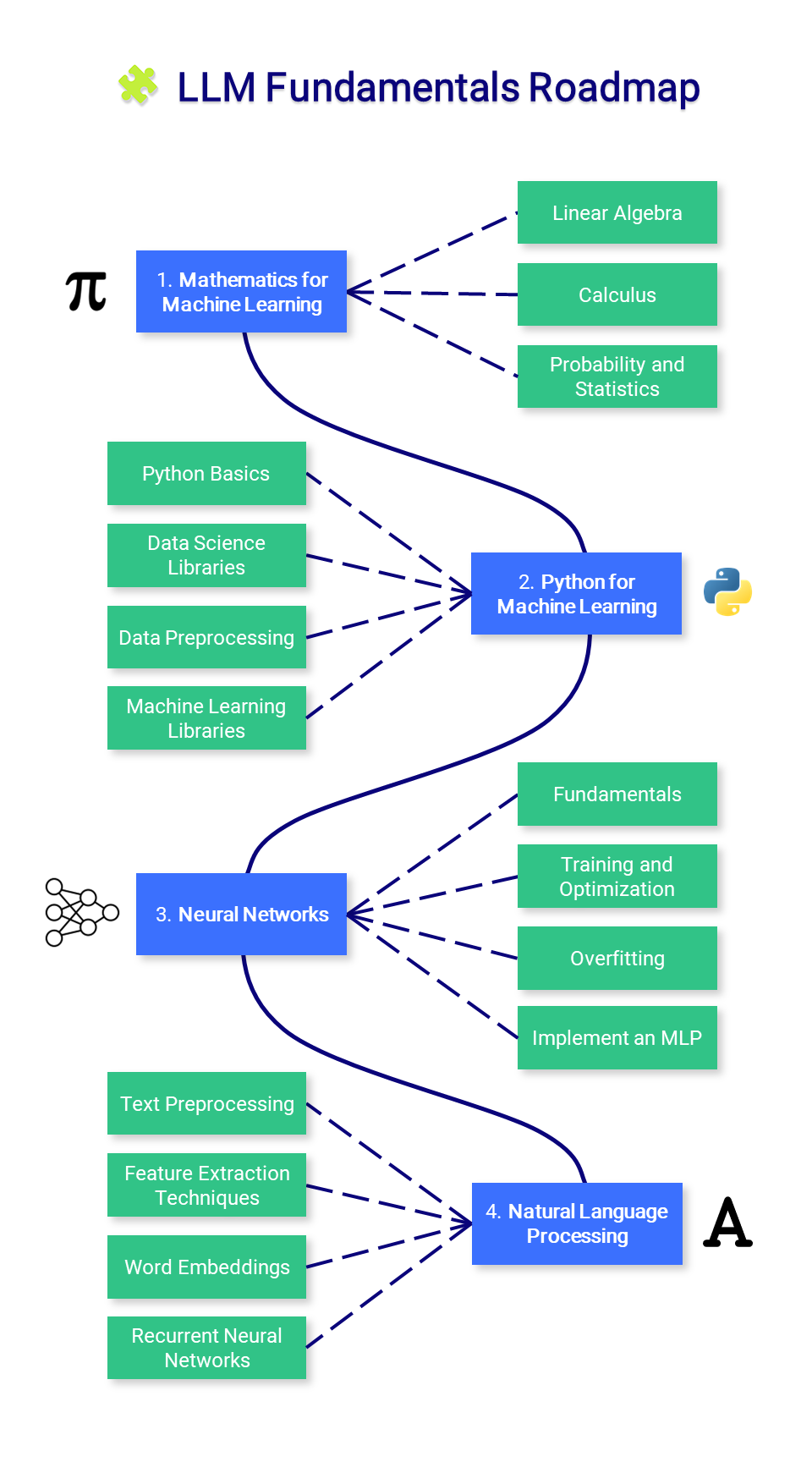

- 🧩 LLM Fundamentals covers essential knowledge about mathematics, Python, and neural networks.

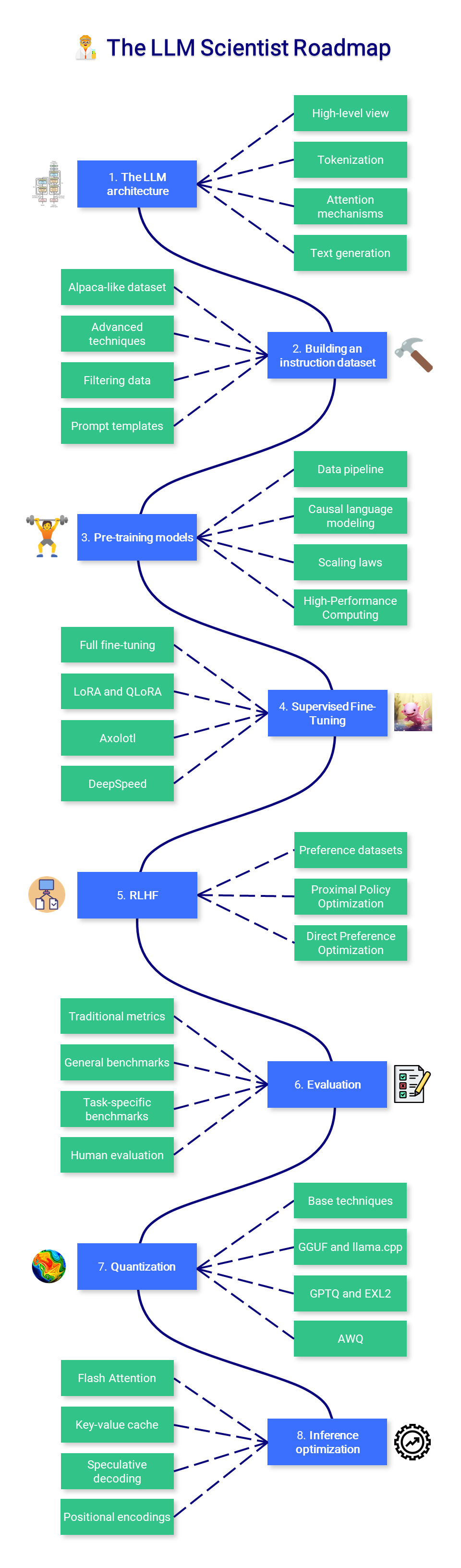

- 🧑🔬 The LLM Scientist focuses on building the best possible LLMs using the latest techniques.

- 👷 The LLM Engineer focuses on creating LLM-based applications and deploying them.

📝 Notebooks

A list of notebooks and articles related to large language models.

Tools

| Notebook | Description | Notebook |

|---|---|---|

| 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod |  |

| 🥱 LazyMergekit | Easily merge models using mergekit in one click. |  |

| ⚡ AutoGGUF | Quantize LLMs in GGUF format in one click. |  |

Fine-tuning

| Notebook | Description | Article | Notebook |

|---|---|---|---|

| Fine-tune Llama 2 in Google Colab | Step-by-step guide to fine-tune your first Llama 2 model. | Article |  |

| Fine-tune LLMs with Axolotl | End-to-end guide to the state-of-the-art tool for fine-tuning. | Article | W.I.P. |

| Fine-tune a Mistral-7b model with DPO | Boost the performance of supervised fine-tuned models with DPO. | Article |  |

Quantization

| Notebook | Description | Article | Notebook |

|---|---|---|---|

| 1. Introduction to Weight Quantization | Large language model optimization using 8-bit quantization. | Article |  |

| 2. 4-bit LLM Quantization using GPTQ | Quantize your own open-source LLMs to run them on consumer hardware. | Article |  |

| 3. Quantize Llama 2 models with GGUF and llama.cpp | Quantize Llama 2 models with llama.cpp and upload GGUF versions to the HF Hub. | Article |  |

| 4. ExLlamaV2: The Fastest Library to Run LLMs | Quantize and run EXL2 models and upload them to the HF Hub. | Article |  |

Other

| Notebook | Description | Article | Notebook |

|---|---|---|---|

| Decoding Strategies in Large Language Models | A guide to text generation from beam search to nucleus sampling | Article |  |

| Visualizing GPT-2’s Loss Landscape | 3D plot of the loss landscape based on weight perturbations. | Tweet |  |

| Improve ChatGPT with Knowledge Graphs | Augment ChatGPT’s answers with knowledge graphs. | Article |  |

🧩 LLM Fundamentals

1. Mathematics for Machine Learning

Before mastering machine learning, it is important to understand the fundamental mathematical concepts that power these algorithms.

- Linear Algebra: This is crucial for understanding many algorithms, especially those used in deep learning. Key concepts include vectors, matrices, determinants, eigenvalues and eigenvectors, vector spaces, and linear transformations.

- Calculus: Many machine learning algorithms involve the optimization of continuous functions, which requires an understanding of derivatives, integrals, limits, and series. Multivariable calculus and the concept of gradients are also important.

- Probability and Statistics: These are crucial for understanding how models learn from data and make predictions. Key concepts include probability theory, random variables, probability distributions, expectations, variance, covariance, correlation, hypothesis testing, confidence intervals, maximum likelihood estimation, and Bayesian inference.

📚 Resources:

- 3Blue1Brown - The Essence of Linear Algebra: Series of videos that give a geometric intuition to these concepts.

- StatQuest with Josh Starmer - Statistics Fundamentals: Offers simple and clear explanations for many statistical concepts.

- AP Statistics Intuition by Ms Aerin: List of Medium articles that provide the intuition behind every probability distribution.

- Immersive Linear Algebra: Another visual interpretation of linear algebra.

- Khan Academy - Linear Algebra: Great for beginners as it explains the concepts in a very intuitive way.

- Khan Academy - Calculus: An interactive course that covers all the basics of calculus.

- Khan Academy - Probability and Statistics: Delivers the material in an easy-to-understand format.

2. Python for Machine Learning

Python is a powerful and flexible programming language that’s particularly good for machine learning, thanks to its readability, consistency, and robust ecosystem of data science libraries.

- Python Basics: Python programming requires a good understanding of the basic syntax, data types, error handling, and object-oriented programming.

- Data Science Libraries: It includes familiarity with NumPy for numerical operations, Pandas for data manipulation and analysis, Matplotlib and Seaborn for data visualization.

- Data Preprocessing: This involves feature scaling and normalization, handling missing data, outlier detection, categorical data encoding, and splitting data into training, validation, and test sets.

- Machine Learning Libraries: Proficiency with Scikit-learn, a library providing a wide selection of supervised and unsupervised learning algorithms, is vital. Understanding how to implement algorithms like linear regression, logistic regression, decision trees, random forests, k-nearest neighbors (K-NN), and K-means clustering is important. Dimensionality reduction techniques like PCA and t-SNE are also helpful for visualizing high-dimensional data.

📚 Resources:

- Real Python: A comprehensive resource with articles and tutorials for both beginner and advanced Python concepts.

- freeCodeCamp - Learn Python: Long video that provides a full introduction into all of the core concepts in Python.

- Python Data Science Handbook: Free digital book that is a great resource for learning pandas, NumPy, Matplotlib, and Seaborn.

- freeCodeCamp - Machine Learning for Everybody: Practical introduction to different machine learning algorithms for beginners.

- Udacity - Intro to Machine Learning: Free course that covers PCA and several other machine learning concepts.

3. Neural Networks

Neural networks are a fundamental part of many machine learning models, particularly in the realm of deep learning. To utilize them effectively, a comprehensive understanding of their design and mechanics is essential.

- Fundamentals: This includes understanding the structure of a neural network such as layers, weights, biases, activation functions (sigmoid, tanh, ReLU, etc.)

- Training and Optimization: Familiarize yourself with backpropagation and different types of loss functions, like Mean Squared Error (MSE) and Cross-Entropy. Understand various optimization algorithms like Gradient Descent, Stochastic Gradient Descent, RMSprop, and Adam.

- Overfitting: Understand the concept of overfitting (where a model performs well on training data but poorly on unseen data) and learn various regularization techniques (dropout, L1/L2 regularization, early stopping, data augmentation) to prevent it.

- Implement a Multilayer Perceptron (MLP): Build an MLP, also known as a fully connected network, using PyTorch.

📚 Resources:

- 3Blue1Brown - But what is a Neural Network?: This video gives an intuitive explanation of neural networks and their inner workings.

- freeCodeCamp - Deep Learning Crash Course: This video efficiently introduces all the most important concepts in deep learning.

- Fast.ai - Practical Deep Learning: Free course designed for people with coding experience who want to learn about deep learning.

- Patrick Loeber - PyTorch Tutorials: Series of videos for complete beginners to learn about PyTorch.

4. Natural Language Processing (NLP)

NLP is a fascinating branch of artificial intelligence that bridges the gap between human language and machine understanding. From simple text processing to understanding linguistic nuances, NLP plays a crucial role in many applications like translation, sentiment analysis, chatbots, and much more.

- Text Preprocessing: Learn various text preprocessing steps like tokenization (splitting text into words or sentences), stemming (reducing words to their root form), lemmatization (similar to stemming but considers the context), stop word removal, etc.

- Feature Extraction Techniques: Become familiar with techniques to convert text data into a format that can be understood by machine learning algorithms. Key methods include Bag-of-words (BoW), Term Frequency-Inverse Document Frequency (TF-IDF), and n-grams.

- Word Embeddings: Word embeddings are a type of word representation that allows words with similar meanings to have similar representations. Key methods include Word2Vec, GloVe, and FastText.

- Recurrent Neural Networks (RNNs): Understand the working of RNNs, a type of neural network designed to work with sequence data. Explore LSTMs and GRUs, two RNN variants that are capable of learning long-term dependencies.

📚 Resources:

- RealPython - NLP with spaCy in Python: Exhaustive guide about the spaCy library for NLP tasks in Python.

- Kaggle - NLP Guide: A few notebooks and resources for a hands-on explanation of NLP in Python.

- Jay Alammar - The Illustration Word2Vec: A good reference to understand the famous Word2Vec architecture.

- Jake Tae - PyTorch RNN from Scratch: Practical and simple implementation of RNN, LSTM, and GRU models in PyTorch.

- colah’s blog - Understanding LSTM Networks: A more theoretical article about the LSTM network.

🧑🔬 The LLM Scientist

1. The LLM architecture

While an in-depth knowledge about the Transformer architecture is not required, it is important to have a good understanding of its inputs (tokens) and outputs (logits). The vanilla attention mechanism is another crucial component to master, as improved versions of it are introduced later on.

- High-level view: Revisit the encoder-decoder Transformer architecture, and more specifically the decoder-only GPT architecture, which is used in every modern LLM.

- Tokenization: Understand how to convert raw text data into a format that the model can understand, which involves splitting the text into tokens (usually words or subwords).

- Attention mechanisms: Grasp the theory behind attention mechanisms, including self-attention and scaled dot-product attention, which allows the model to focus on different parts of the input when producing an output.

- Text generation: Learn about the different ways the model can generate output sequences. Common strategies include greedy decoding, beam search, top-k sampling, and nucleus sampling.

📚 References: - The Illustrated Transformer by Jay Alammar: A visual and intuitive explanation of the Transformer model. - The Illustrated GPT-2 by Jay Alammar: Even more important than the previous article, it is focused on the GPT architecture, which is very similar to Llama’s. - LLM Visualization by Brendan Bycroft: Incredible 3D visualization of what happens inside of an LLM. * nanoGPT by Andrej Karpathy: A 2h-long YouTube video to reimplement GPT from scratch (for programmers). * Attention? Attention! by Lilian Weng: Introduce the need for attention in a more formal way. * Decoding Strategies in LLMs: Provide code and a visual introduction to the different decoding strategies to generate text.

3. Pre-training models

Pre-training is a very long and costly process, which is why this is not the focus of this course. It’s good to have some level of understanding of what happens during pre-training, but hands-on experience is not required.

- Data pipeline: Pre-training requires huge datasets (e.g., Llama 2 was trained on 2 trillion tokens) that need to be filtered, tokenized, and collated with a pre-defined vocabulary.

- Causal language modeling: Learn the difference between causal and masked language modeling, as well as the loss function used in this case. For efficient pre-training, learn more about Megatron-LM or gpt-neox.

- Scaling laws: The scaling laws describe the expected model performance based on the model size, dataset size, and the amount of compute used for training.

- High-Performance Computing: Out of scope here, but more knowledge about HPC is fundamental if you’re planning to create your own LLM from scratch (hardware, distributed workload, etc.).

📚 References: * LLMDataHub by Junhao Zhao: Curated list of datasets for pre-training, fine-tuning, and RLHF. * Training a causal language model from scratch by Hugging Face: Pre-train a GPT-2 model from scratch using the transformers library. * TinyLlama by Zhang et al.: Check this project to get a good understanding of how a Llama model is trained from scratch. * Causal language modeling by Hugging Face: Explain the difference between causal and masked language modeling and how to quickly fine-tune a DistilGPT-2 model. * Chinchilla’s wild implications by nostalgebraist: Discuss the scaling laws and explain what they mean to LLMs in general. * BLOOM by BigScience: Notion page that describes how the BLOOM model was built, with a lot of useful information about the engineering part and the problems that were encountered. * OPT-175 Logbook by Meta: Research logs showing what went wrong and what went right. Useful if you’re planning to pre-train a very large language model (in this case, 175B parameters). * LLM 360: A framework for open-source LLMs with training and data preparation code, data, metrics, and models.

5. Reinforcement Learning from Human Feedback

After supervised fine-tuning, RLHF is a step used to align the LLM’s answers with human expectations. The idea is to learn preferences from human (or artificial) feedback, which can be used to reduce biases, censor models, or make them act in a more useful way. It is more complex than SFT and often seen as optional.

- Preference datasets: These datasets typically contain several answers with some kind of ranking, which makes them more difficult to produce than instruction datasets.

- Proximal Policy Optimization: This algorithm leverages a reward model that predicts whether a given text is highly ranked by humans. This prediction is then used to optimize the SFT model with a penalty based on KL divergence.

- Direct Preference Optimization: DPO simplifies the process by reframing it as a classification problem. It uses a reference model instead of a reward model (no training needed) and only requires one hyperparameter, making it more stable and efficient.

📚 References: * An Introduction to Training LLMs using RLHF by Ayush Thakur: Explain why RLHF is desirable to reduce bias and increase performance in LLMs. * Illustration RLHF by Hugging Face: Introduction to RLHF with reward model training and fine-tuning with reinforcement learning. * StackLLaMA by Hugging Face: Tutorial to efficiently align a LLaMA model with RLHF using the transformers library. * LLM Training: RLHF and Its Alternatives by Sebastian Rashcka: Overview of the RLHF process and alternatives like RLAIF. * Fine-tune Mistral-7b with DPO: Tutorial to fine-tune a Mistral-7b model with DPO and reproduce NeuralHermes-2.5.

7. Quantization

Quantization is the process of converting the weights (and activations) of a model using a lower precision. For example, weights stored using 16 bits can be converted into a 4-bit representation. This technique has become increasingly important to reduce the computational and memory costs associated with LLMs.

- Base techniques: Learn the different levels of precision (FP32, FP16, INT8, etc.) and how to perform naïve quantization with absmax and zero-point techniques.

- GGUF and llama.cpp: Originally designed to run on CPUs, llama.cpp and the GGUF format have become the most popular tools to run LLMs on consumer-grade hardware.

- GPTQ and EXL2: GPTQ and, more specifically, the EXL2 format offer an incredible speed but can only run on GPUs. Models also take a long time to be quantized.

- AWQ: This new format is more accurate than GPTQ (lower perplexity) but uses a lot more VRAM and is not necessarily faster.

📚 References: * Introduction to quantization: Overview of quantization, absmax and zero-point quantization, and LLM.int8() with code. * Quantize Llama models with llama.cpp: Tutorial on how to quantize a Llama 2 model using llama.cpp and the GGUF format. * 4-bit LLM Quantization with GPTQ: Tutorial on how to quantize an LLM using the GPTQ algorithm with AutoGPTQ. * ExLlamaV2: The Fastest Library to Run LLMs: Guide on how to quantize a Mistral model using the EXL2 format and run it with the ExLlamaV2 library. * Understanding Activation-Aware Weight Quantization by FriendliAI: Overview of the AWQ technique and its benefits.

Acknowledgements

This roadmap was inspired by the excellent DevOps Roadmap from Milan Milanović and Romano Roth.

Special thanks to Thomas Thelen for motivating me to create a roadmap, and André Frade for his input and review of the first draft.

Disclaimer: I am not affiliated with any sources listed here.